As you probably know by now, I’m traveling around the world with my family for a year. Since I don’t want to miss out on taking pictures, flying my drone and capturing movies with the iPhone or GoPro, I brought all these toys along. Capturing movies and shooting RAW with my Nikon D850 quickly creates a lot of data. So how do you handle all this?

Content creation

Sources

- Nikon D850
  - RAW
  - Video
- GoPro
  - RAW
  - JPG
  - Video
- iPhone
  - JPG
  - Video
  - AHVC
- Drone – DJI Mavic Air
  - RAW
  - Video
  - HDR
  - Panorama
  - JPG

I bought a new laptop prior to our departure, a Lenovo X1 Extreme with 1 TB of disk space. To have enough local space, I added a second NVMe SSD with 1 TB capacity.

I’ve written a little script to create a folder structure for each day or event. This makes sure that I save the content into the right location.

#!/bin/bash
Picture_path=Pictures_2019/TOPLevelPath/
#Date=$(date "+%Y_%m_%d_%n")
NewPath="$1"
echo "create new Picture folder: $NewPath "
#echo $Picture_path
# Stop if the base folder is missing, so nothing is created in the wrong place
cd "$Picture_path" || exit 1
mkdir -p "$NewPath/Nikon/RAW"
mkdir -p "$NewPath/Nikon/Video"
mkdir -p "$NewPath/GoPro/Video"
mkdir -p "$NewPath/GoPro/RAW"
mkdir -p "$NewPath/Drone/Video"
mkdir -p "$NewPath/Drone/HDR"
mkdir -p "$NewPath/Drone/Pano"
mkdir -p "$NewPath/Drone/RAW"
mkdir -p "$NewPath/iPhone/Video"
mkdir -p "$NewPath/iPhone/Images"
mkdir -p "$NewPath/4k"
echo "done creating all subfolders"

I tried to write a script that would import the content from each source based on the creation date, but this turned out to be quite a mess on Windows (using the Windows Subsystem for Linux with Ubuntu), as MTP devices (GoPro and iPhone) are not directly accessible. Furthermore, there is no official file field where the creation date of a file is stored, only the last-modified date. So I gave up on this idea.
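For sources that do show up as a normal folder (an SD card in a card reader, for example), a fallback based on the last-modified date could look roughly like the sketch below. It does not solve the MTP problem, and it is not the import script I gave up on. The function name `sort_by_mtime` and the `YYYY_MM_DD` folder naming are assumptions for illustration, and it relies on GNU `date -r FILE`.

```bash
#!/usr/bin/env bash

# Move every regular file in $1 into a sub-folder of $2 that is named
# after the file's last-modified date (YYYY_MM_DD).
# Assumption: GNU coreutils, where `date -r FILE` prints the mtime of FILE.
sort_by_mtime() {
    local src=$1
    local dest=$2
    local f day
    for f in "$src"/*; do
        [ -f "$f" ] || continue          # skip sub-folders and empty globs
        day=$(date -r "$f" +%Y_%m_%d)    # e.g. 2019_12_13
        mkdir -p "$dest/$day"
        mv -n "$f" "$dest/$day/"         # -n: never overwrite an existing file
    done
}

# Usage (paths are placeholders):
#   sort_by_mtime /mnt/e/DCIM/100GOPRO Pictures_2019/import
```

Keep in mind that the last-modified date changes as soon as a file is edited or copied without preserving timestamps, so this only works on files straight from the card.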

I also needed to rename my GoPro files, as GoPro has an awkward naming system that messes up my sorting in DaVinci Resolve. Using exiftool, the name is set based on the creation date and time:

#!/usr/bin/env bash

cd

exiftool '-filename<CreateDate' -d GoPro_H7_%Y_%m_%d__%H_%M_%S_%%-c.%%le -r -ext MP4 -P GoPro/

Be aware that this script renames ALL MP4 files in the given directory and, because of -r, in its subdirectories.
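Because the rename touches every matching file, a dry run first can be reassuring. exiftool has a `TestName` tag that is used like `FileName` but only prints the old and new names without renaming anything. The sketch below wraps that in a function; the name `preview_rename` is just an assumption for illustration, and `-P` is left out because no file is written.

```bash
#!/usr/bin/env bash

# Print what the GoPro rename would do, without renaming any file.
# TestName is exiftool's dry-run counterpart of FileName.
preview_rename() {
    local dir=$1
    exiftool '-testname<CreateDate' \
        -d 'GoPro_H7_%Y_%m_%d__%H_%M_%S_%%-c.%%le' \
        -r -ext MP4 "$dir"
}

# Usage:
#   preview_rename GoPro/
```

If the printed names look right, swapping `-testname` back to `-filename` performs the actual rename.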

The same script, but for the iPhone content

#!/usr/bin/env bash
iPhonePath="$1"
echo "$iPhonePath"
cd
exiftool '-filename<CreateDate' -d iPhone_%Y_%m_%d__%H_%M_%S_%%-c.%%le -r -ext MOV -ext MP4 -P "$iPhonePath"

Update the blog

Once I have all my files in the right place, I start editing my images or cut a little video of the day.

As I also run a blog about our trip, I need to get some content uploaded rather quickly. I’ve decided to create a new folder called “4k” for this. This is where the final JPGs are rendered out.

The blog also has an image gallery based on Piwigo. I upload the “4k” images to the server and then import them directly from the server’s local disk with the “Quick-Sync” option. This way I do not duplicate the images.

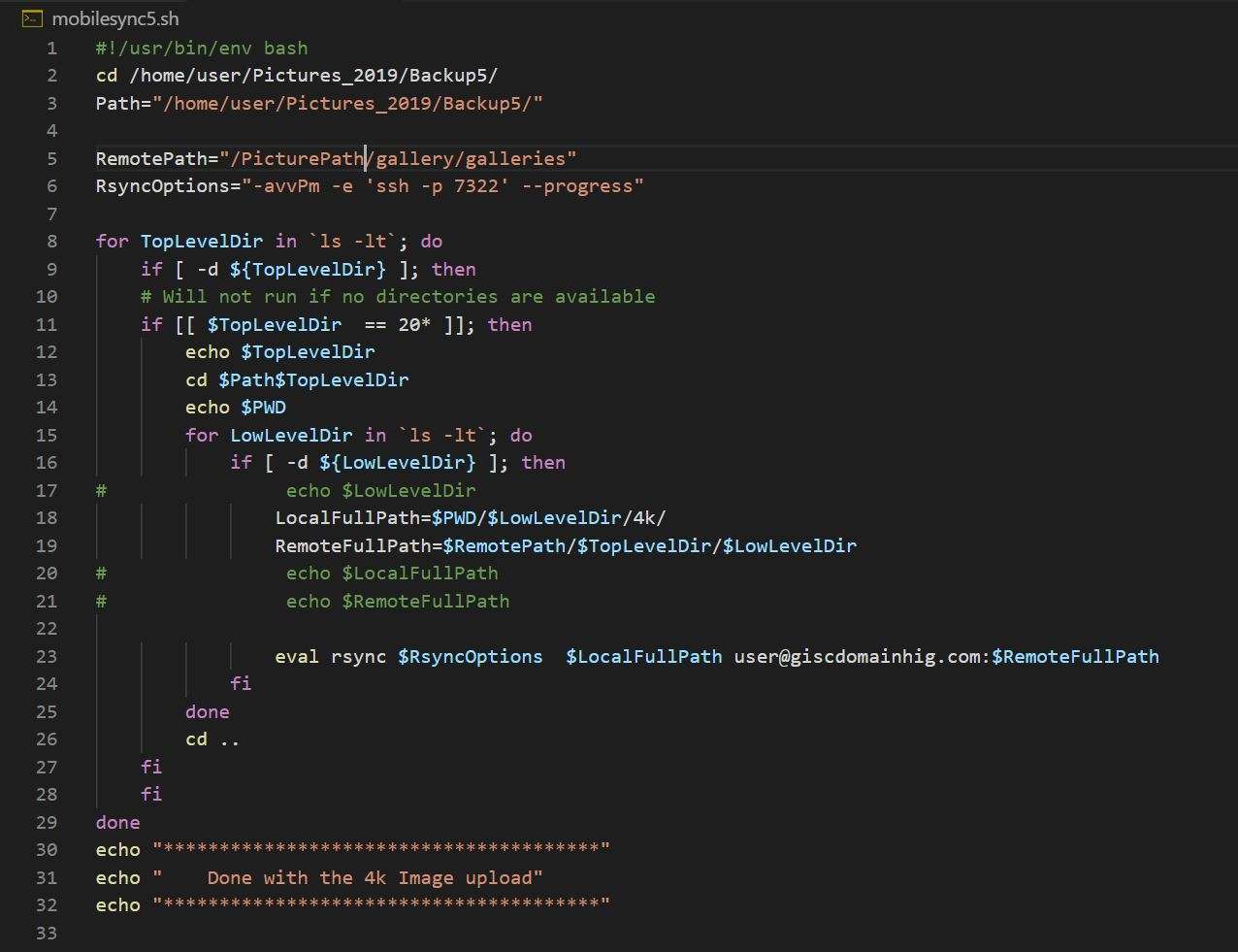

For this step I wrote a script that syncs all “4k” folders from my PC to the Piwigo image directory on the remote server.

#!/usr/bin/env bash

Path="/home/user/Pictures_2019/Backup/"
RemotePath="/RemotePath/gallery/galleries"
# Options as an array, so no eval is needed. -P already implies --progress.
RsyncOptions=(-avvPm -e 'ssh -p 7322')

cd "$Path" || exit 1

# Top-level folders start with the year, e.g. 2019_... or 2020_...
for TopLevelDir in 20*/; do
    [ -d "$TopLevelDir" ] || continue    # glob matched nothing
    echo "${TopLevelDir%/}"
    for LowLevelDir in "$TopLevelDir"*/; do
        LocalFullPath="$Path${LowLevelDir}4k/"
        # Skip folders that have no rendered images yet
        [ -d "$LocalFullPath" ] || continue
        RemoteFullPath="$RemotePath/${LowLevelDir%/}"
        rsync "${RsyncOptions[@]}" "$LocalFullPath" "user@domain.com:$RemoteFullPath"
    done
done

echo "***************************************"
echo " Done with the 4k Image upload"
echo "***************************************"
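Before uploading, it can help to see which “4k” folders would be picked up at all. The following is a small sketch using `find`, assuming the same layout as above (backup root, then a trip folder, then a day or event folder, then `4k`). The function name `list_4k_dirs` is made up for this example, and unlike the sync script it does not filter on folder names starting with `20`.

```bash
#!/usr/bin/env bash

# List every "4k" folder exactly three levels below the backup root:
#   <root>/<trip>/<day or event>/4k
list_4k_dirs() {
    local root=$1
    find "$root" -mindepth 3 -maxdepth 3 -type d -name 4k | sort
}

# Usage:
#   list_4k_dirs /home/user/Pictures_2019/Backup
```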

Backup

Local backup

I’ve brought along some SSDs for the local backup. They are all about 500 GB in size, which means I can store about three backups on my laptop. Once an SSD is full, I can send it back to Switzerland by mail. This way I have a three-location backup in case I cannot back up my content online. For the local backup I’ve used “robocopy” on Windows.

Robocopy.exe .\2019\Backup5\ 'E:\' /e /MT

Online Backup

As the content is growing quite rapidly, I try to sync as often as I can. It turns out that most places cap the upload at about 5 Mbit/s. If you do the math, you can upload about 50 GB per 24 h. This sounds like a lot, but most campgrounds either had a data cap of 2 GB or less (250 MB!!) per device per day, or a lot less bandwidth. So uploading 50 GB per 24 h is more of a theoretical number. The script below does not make it faster, but you can simply restart the backup if the connection drops, and trust me, this happens a lot.
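The math can be spelled out with plain shell arithmetic. This is only a back-of-the-envelope sketch: it uses decimal units (1 GB = 1000 MB), ignores protocol overhead, and the function names are invented for this example.

```bash
#!/usr/bin/env bash

# GB that a link of $1 Mbit/s can move in 24 hours:
#   Mbit/s * 86400 s / 8 bit per byte / 1000 MB per GB
daily_upload_gb() {
    local mbit_per_s=$1
    echo $(( mbit_per_s * 86400 / 8 / 1000 ))
}

# Days needed to upload $1 GB when only $2 MB per day are allowed
# (rounded up to full days).
days_for_cap() {
    local gb=$1
    local cap_mb=$2
    echo $(( (gb * 1000 + cap_mb - 1) / cap_mb ))
}

daily_upload_gb 5       # prints 54
days_for_cap 50 2000    # prints 25
days_for_cap 50 250     # prints 200
```

So one day of uncapped 5 Mbit/s upload corresponds to 25 days at a 2 GB cap, or 200 days at a 250 MB cap.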

I wrote a bash script based on rsync to copy my local data to my server back home. This is not really rocket science, but it makes life a lot simpler 😉

#!/usr/bin/env bash

cd

# Back up folder Backup3 into Pictures/2019

rsync -avvP -e 'ssh -p 7322' --progress ./Backup3/ user@domain.com:/home/user/Pictures/2019/

# Back up folder Backup4 into Pictures/2019 and Pictures/2020

rsync -avvP -e 'ssh -p 7322' --progress ./Pictures_2019/Backup4/2019_12_13_New_Zealand_North_Island/ user@domain.com:/home/user/Pictures/2019/2019_12_13_New_Zealand_North_Island/

rsync -avvP -e 'ssh -p 7322' --progress ./Pictures_2019/Backup4/2020_01_22_New_Zealand_South_Island/ user@domain.com:/home/user/Pictures/2020/2020_01_22_New_Zealand_South_Island/

# Back up folder Backup5 into Pictures/2020

rsync -avvP -e 'ssh -p 7322' --progress ./Backup5/ user@domain.com:/home/user/Pictures/2020/
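Since rsync's `-P` includes `--partial`, an interrupted transfer can pick up where it stopped when the same command is run again. Restarting by hand gets tedious on a flaky campground connection, so one option is a small retry wrapper like the sketch below. The `retry` function, the attempt count and the delay are assumptions for illustration and not part of my original script.

```bash
#!/usr/bin/env bash

# Run a command until it succeeds, at most $1 times,
# waiting $2 seconds between attempts.
retry() {
    local max_attempts=$1
    local delay=$2
    shift 2
    local attempt=1
    until "$@"; do
        if [ "$attempt" -ge "$max_attempts" ]; then
            echo "giving up after $attempt attempts" >&2
            return 1
        fi
        echo "attempt $attempt failed, retrying in ${delay}s" >&2
        attempt=$((attempt + 1))
        sleep "$delay"
    done
}

# Usage: up to 20 attempts, 60 seconds apart
#   retry 20 60 rsync -avvP -e 'ssh -p 7322' ./Backup5/ user@domain.com:/home/user/Pictures/2020/
```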